Project: Android Wall

How we built a display wall out of 140 Android devices, from TVs to smartwatches

How it all started

I occasionally help my friend Matt from TheAlphabetCollective agency/studio with their experiential marketing projects. In 2019 they were asked via BrandFuel to help build one of the Android installations for MobileWorldCongress in Barcelona. My friends asked me if I could help build the software side.

The requirement of the project was to play a video in a loop on ~140 devices for the whole of the MWC 2019 event, which lasted 4 days. There were separate day and night videos, and we had to switch between them.

We had about 2 months to build the software, the hardware and the frame that would hold all the devices. A friend designed the iron rig and we assembled it at the convention. This image shows the rig assembled and being installed.

Challenges and Solutions

We knew right from the start that this was not going to be an easy project. My friend and I come from a telecom background. Together we have built long-range WiFi-to-GSM networks as well as media distribution systems.

We faced the following technical challenges during the project.

1 - Fragmented Android Devices and OS versions

We were given a list of Android devices that included Android TVs, Tablets, Smart Phones and Smart Watches. The devices were from various manufacturers including Huawei, Samsung, Nokia, LG, Xiaomi, Motorola and a few other lesser-known brands.

Android is a highly fragmented platform and we knew this was going to be a problem for software development.

Solution - Android SDK, targeting Android 7

We decided early on to build the apps with the Android SDK rather than tools like ReactNative or Xamarin. Staying close to the OS would let us support every device, even if it meant creating several versions. A good thing about these projects is that they are short-lived, so you don’t have to support the code for years to come. We also set Android 7 (API level 24) as the minimum version for the app, since that was the lowest Android version on any of the devices.

We ended up creating only 3 versions of the app (TV, Mobile and SmartWatch), mainly due to UI differences. All three lived in the same project, each with its own UI code.

Here is a picture of the software displaying its DEBUG screen.

2 - Connectivity

We knew from previous experience that the connectivity provided by venues at such events is never stable enough for a realtime application.

Solution - Our own LAN

We decided to build our own local network. We checked which devices could be connected via Ethernet; the rest had to go on WiFi. We created 3 networks with a mix of WiFi and LAN to provide reliability and capacity for all the devices.

3 - Content delivery to the devices

The playback on all devices had to be in sync to create a unified bigger screen effect. We went through a few approaches to get the best result.

Discarded Solution - WebRTC/RTSP playback

For a while we discussed streaming the videos to the devices using WebRTC or RTSP. In this approach, the devices would connect to a streaming server on the LAN and stream the videos in real time. We discarded this idea because we were concerned about the connection reliability of the devices on WiFi. Moreover, the videos had to play in a loop: if one device started playback a second late because of slower hardware, it would be off by a second and, over time, would drift completely out of sync.

Solution - Pre-loaded files

We decided to pre-load the video files on the devices, as they were not going to change often. We also decided to have the videos cropped for each device according to its position on the grid. This way each video file would be smaller and the software would not have to crop the video itself, whether in real time or offline. We gave this information to our video editing partner, who recreated the grid in Unity3D to crop and export the videos.

4 - Playback position deviation

We had settled on local file playback, but we still had to make sure that the devices played their files synchronously and didn’t drift apart over time.

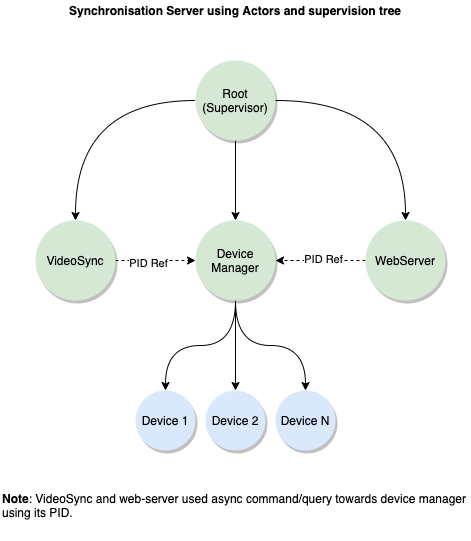

Solution - Central Synchronisation Server

The solution was simply to fake video playback with a timer on the server and broadcast the expected frame of the video to all the devices. Each device would then seek its playback to the correct position. If this is done consistently, viewers do not notice the corrections. We set the accepted deviation in playback across all the devices to 50-100ms.

This server also acted as a web server and device controller, allowing us to upload new videos with a single command and switch between the night and day videos.

5 - Device Time

At first it seemed simple to just broadcast a packet to the devices forcing them to seek, but not all packets reach the devices at the same time. Network latency, even on a local network, could therefore affect playback: a device that reads the packet 1 second late would seek 1 second late and would always be behind.

Solution - NTP

We added the server’s timestamp to each playback position broadcast packet, so that devices could calculate the latency and account for it when seeking. This only works if all the devices sync their clocks from a single source, and we noticed that the clocks on the Android devices were skewed by a few seconds. We decided to set up an NTP server on the same machine as the synchronisation server and make sure that the devices used it to sync their time. Android (at that time at least) didn’t have an option to set a custom NTP server, so we ended up using the TrueTime library for this.

6 - Transport protocol for broadcasting

Early on it seemed plausible to just use UDP broadcast from the server to send the new position to all of the devices. This proved very difficult due to the different OS versions and the security implementations of the manufacturers.

Solution - TCP sockets with Actor Model

After all our attempts with UDP failed, we decided that TCP sockets were the best way to manage the devices. The devices would act as clients and connect to the synchronisation server configured in the app. We decided to use Go as the language for the server side. To keep track of the connected devices we decided to use the Proto.Actor framework. This way we got the goodness of supervisors and stateful actors to manage each device’s life cycle.

7 - Irregular Keyframe positions in the video

Video encoders optimise the video size by placing keyframes only where necessary. We realised that the seek function jumps to the closest keyframe instead of the exact position requested. This sometimes caused the videos on the devices to go out of sync by a second or more.

Solution - Export videos with consistent keyframes

We asked our video editing partner to export the videos with keyframes at consistent positions, ignoring the size optimisation. We didn’t care about the size as we were going to pre-load the videos anyway. This was the final piece in keeping video playback synchronised.

Final Result

It took us about 3 days, working 16 hours a day on site, to assemble and test the whole system.

This is how it looked at 4 am, before the event opened in the morning.

TL;DR

Built the app for Android 7, the lowest Android version on the devices.

Own private network with mostly Ethernet for connectivity.

Pre-loaded files on the devices pushed using the central server also running on the LAN.

Used TCP sockets instead of UDP broadcast.

Go with Proto.Actor for the synchronisation server.

Local NTP server for device time synchronisation.

Consistent keyframes in the video.

Expected playback position broadcast from sync server to all the devices to keep videos in sync.